Empathie: Reducing Friction for Users Completing App Store Reviews

Prior to this project, there was no established system to prompt users to review the Empathie app. I designed an extensible, robust system that utilized user data and worked within a particularly constrained review system. Although my proposal was initially rejected, I made the case to the team for a more robust system and helped rearrange content according to what users were most likely to rate.

Role

Product Designer

Timeline

July ’22 - January ’23

Core Responsibilities

Product design, product strategy, UX research

Problem

Users face a high activation barrier to leaving reviews and view review requests as a disruption to their time.

Prior to this project, Empathie didn’t have an established channel for requesting user reviews, which are imperative for gaining traction, especially during the initial launch. However, users don’t want to be asked to review: it makes them feel uncomfortable, and it often interrupts them during other tasks.

Research - Getting to know the lay of the land

Users are inconvenienced by review requests, even when they have positive sentiments towards the app they are using.

We conducted a survey to scope general sentiments towards completing review requests and found that users:

dislike being interrupted in the middle of an action or task,

do not want to be asked to review an app they are unfamiliar with, and

do not want to complete review requests even when they enjoy using the app.

Goal

Encourage users to view leaving reviews as a helpful, altruistic act for other people like them

Because of the overwhelming activation barrier against completing review requests, we need to approach users at a time when they are receiving uniquely helpful insights. Altruistic acts, such as sharing valuable knowledge, are beneficial to both the well-being of the individual and of Empathie.

Constraints

Due to the constraints of the App Store and Google Play Store, we could only design when the review request would appear.

We were not able to modify the visual design or copy of the review request, so our only alterable property was the timing of the request. We mapped out three potential entry points within the app, aiming to prompt users when they would be receiving uniquely helpful insights.

Mapping out Empathie user flow and identifying three entry points with Cassidy Cheng, Product Manager @ Empathie

Entry Point 1/3

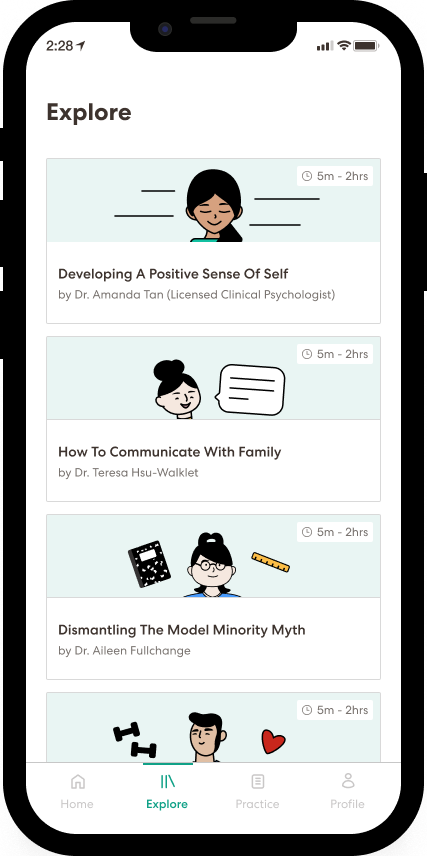

Learning modules are bite-sized, interactive learning videos about a mental wellness topic

Entry Point 2/3

Activities are actionable exercises to help manage difficult feelings

Entry Point 3/3

Mood check-ins help users name and describe their thoughts and feelings

Investigation - Usability tests

In a small sample, users felt more motivated to rate after completing a mood check-in and a learning module compared to completing an activity.

In order to be agile for the launch of Empathie, we conducted a live usability test on each of the entry points with a small sample group of six participants. With this data, we re-evaluated each entry point.

Entry Point 1/3

Learning modules performed well, but coding review prompts for each module would be resource intensive, so we needed to identify the modules with the highest return

Prompting after learning modules made sense, given that they make up the bulk of Empathie’s distinctive POC-centric content. However, discussions with the engineering team made clear that adding a prompt to every module would be resource intensive.

Entry Point 2/3

Activities scored lower than expected, but the small sample size of the test prompted further investigation into how users valued or didn’t value activities

Although activities did not score as well as the other two entry points, we had a hunch that this might have been skewed by how small the sample size was and the nature of a live usability test. I wanted to investigate whether the previous beta data, which included activities, was consistent with our live usability test.

Entry Point 3/3

Limiting review requests to positive mood check-ins would be unethical, so we put less emphasis on this entry point

After further consideration, we recognized that requesting a review after a negative mood check-in could frustrate the user. However, isolating review requests to only after positive check-ins would be unethical. While there is still value in requesting after a mood check-in, we decided to put less emphasis on this entry point.

Investigation - Learning Modules Usability Test

Users’ increased motivation to rate a learning module depended on the novelty of the insights they gained.

We tested each learning module individually on UsabilityHub to avoid cognitive overload. Modules that users noted had novel information, such as the Model Minority Myth learning module, had a higher percentage of completed requests and a higher average rating. The insights from the tests also led our content team to rearrange the content according to which modules performed best.

“Normally I find [the request] annoying, but in this case the app seems very useful and potentially impactful to a broad demographic”

“I rate it five because this application raises awareness about [my] behavior and it’s really informative and accurate”

Investigation - Past Beta Data

Users find more value in activities and are more willing to pay if they have tried at least two activities

More rigorous and realistic data from the most recent beta indicated that users found positive value in the activities. In a post-beta survey, users who tried at least two activities rated the activities as more helpful and reported a higher average willingness to pay to use Empathie to reach their emotional wellness goals than users who did not. This validated our hunch that activities were more impactful to users than our small-sample test initially suggested.

Team Alignment

Engineering initially rejected our proposal as it was too difficult to do, but approved our proposal after our tests demonstrated it had high return value

Initially, engineering declined our entry point proposal due to limited time and resources. In response, we emphasized the high return value our tests demonstrated. Upon reconsideration, the engineering team agreed to proceed with our proposal. Engineering also agreed to time review prompts to appear after users become more familiar with the app, rather than right after their initial interaction with the entry points.

Solution

An extensible weighted point value system that prompts users when they are most likely receiving uniquely helpful insights

I created a point value system that would prompt users after they reached a certain point threshold. Based on past beta data, data from our usability tests, and the value propositions of Empathie, each entry point was weighted differently. As Empathie’s bread and butter and the entry point that tested the best, learning modules were weighted most heavily, followed by activities and mood check-ins.
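To make the mechanics concrete, here is a minimal Python sketch of how a weighted point threshold like this could work. It is an illustration, not the shipped implementation: the weight values, the threshold, the entry point keys, and the `ReviewPromptTracker` name are all hypothetical. The only detail taken from the project is the ordering of the weights (learning modules, then activities, then mood check-ins). The sketch also assumes only the first review request is in scope, so it prompts at most once.

```python
from dataclasses import dataclass, field

# Hypothetical values: the project only fixes the ordering
# (learning modules > activities > mood check-ins), not the numbers.
DEFAULT_WEIGHTS = {"learning_module": 3, "activity": 2, "mood_check_in": 1}
DEFAULT_THRESHOLD = 6


@dataclass
class ReviewPromptTracker:
    """Accumulates weighted points per user and signals when to request a review."""

    weights: dict = field(default_factory=lambda: dict(DEFAULT_WEIGHTS))
    threshold: int = DEFAULT_THRESHOLD
    points: int = 0
    prompted: bool = False

    def record(self, entry_point: str) -> bool:
        """Add points for a completed entry point.

        Returns True exactly once: the first time the running total
        reaches the threshold. Later calls return False.
        """
        if entry_point not in self.weights:
            raise ValueError(f"Unknown entry point: {entry_point}")
        self.points += self.weights[entry_point]
        if not self.prompted and self.points >= self.threshold:
            self.prompted = True
            return True
        return False


tracker = ReviewPromptTracker()
results = [
    tracker.record("mood_check_in"),    # total 1, below threshold
    tracker.record("activity"),         # total 3, below threshold
    tracker.record("learning_module"),  # total 6, threshold reached
    tracker.record("learning_module"),  # already prompted
]
print(results)
```

Because the threshold takes several interactions to reach, a user is only prompted after becoming familiar with the app. Keeping the weights in a single mapping is what makes the sketch extensible: a new entry point, or a higher weight for a module that tests especially well, is one new key rather than new prompt logic.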

Vision of the future

Implementing live data to allow users to share the positive impact and insights from their newfound knowledge

Sharing knowledge with others feels most rewarding when you feel like you have something to say. User data from a live product, rather than a beta, would give a more accurate picture of what is most impactful and insightful for users and would let us optimize the system further. In this project, we investigated the scope of prompting the first review request, but more data would help identify what would be ideal for subsequent requests.

Conclusion

Improving my ability to evangelize an idea cross-functionally, while being sure to challenge my own initial data and beliefs

I learned the importance of believing in the value of my designs, even if my initial proposal is rejected. However, it was also an essential lesson to question how the data I collected could be skewed by testing constraints and to seek more accurate data from previous projects. While I believed that prompting users after a positive mood entry would be impactful, ethical concerns regarding their privacy caused me to alter how requests from that entry point would be incorporated. Overall, it was quite interesting working on a project that had many constraints and involved encouraging a behavior users typically feel negatively toward.